M2RAI – Multimodal and Multitask Responsible AI: Risk Control, Audit Tools, and Biometric Applications (2025-2028)

Title: Multimodal and Multitask Responsible AI: Risk Control, Audit Tools, and Biometric Applications (M2RAI).

Type: Spanish National R&D Program

Code: PID2024-160053OB-I00

Funding: ca. 258 Keuros

Participants: Univ. Autonoma de Madrid, Univ. Politécnica de Madrid

Period: September 2025 – August 2028

Principal investigators: Aythami Morales and Julian Fierrez

Objectives:

- To develop new knowledge and general tools to audit Artificial Intelligence (AI) systems, enabling their safe, ethical, and lawful integration into society in alignment with the European Union’s AI regulations. Special attention will be given to AI systems based on data-driven machine learning, e.g., biometric systems, LLMs, and multimodal generative AI.

- Research and development of new multimodal and multitask learning algorithms that seamlessly integrate human-aligned objectives with traditional performance optimization strategies. While current AI models are often designed to maximize accuracy or efficiency in predefined tasks, they frequently overlook critical human-centered considerations such as fairness, interpretability, and ethical alignment. Our proposed research aims to bridge this gap by creating new machine learning algorithms capable of optimizing multiple objectives simultaneously, balancing task-specific performance with societal and user-centric priorities.

- To apply the tools and algorithms developed in the two previous general objectives to four distinct use cases in impactful domains: sport/rehabilitation, social AI, text analysis/generation, and digital onboarding (including smartphone-based biometrics and ID document authentication). This objective aims to validate the effectiveness of the proposed AI auditing tools and multitask learning algorithms by addressing real-world challenges in these areas while ensuring compliance with ethical, safety, and regulatory standards. This practical objective also entails researching and developing the enabling technologies needed to reach, and experiment at, the state of the art in each use case (exploiting the know-how and technologies currently available in the research team).

BIOPROCTORING – Plataforma de Reconocimiento Biométrico y Análisis de Comportamiento durante Evaluaciones Online (2022-2025)

Title: BIO-PROCTORING: Plataforma de Reconocimiento Biométrico y Análisis de Comportamiento durante Evaluaciones Online.

Funding: ca. 240 Keuros

Participants: Univ. Autonoma de Madrid.

Period: October 2022 – October 2024

Principal investigators: Aythami Morales and Julián Fierrez

Objectives:

- Design and development of a multimodal biometric and behavioral platform for online courses. The platform will include face recognition technologies, keystroke dynamics recognition, and behavior models to improve trustworthiness and security during online evaluations.

INSPIRA-CM – Identificación de Mecanismos, Biomarcadores e Intervenciones mediante abordajes Computacionales (2023-2027)

Title: INSPIRA-CM – Identificación de Mecanismos, Biomarcadores e Intervenciones en comorbilidad en Enfermedades Respiratorias Hipoxémicas mediante abordajes preclínicos, clínicos y Computacionales.

Type: Programa de I+D en Biomedicina 2022 (CM)

Code: TP2022/BMD-7224

Funding: ca. 55 Keuros

Participants: Univ. Autonoma de Madrid, Univ. Complutense de Madrid, Fundación para la Investigación Biomédica del Hospital Universitario La Paz

Period: January 2023 – December 2027

Principal investigator: Aythami Morales

Objectives:

- Design of computational models for the generation of synthetic biomedical data. Within this objective, techniques will be developed to automatically generate realistic and balanced data sets. Advanced machine learning techniques (e.g., generative adversarial architectures and/or deep reinforcement learning), supported by the expert knowledge of the consortium, will be used. The goal is to understand the natural processes underlying data related to chronic obstructive pulmonary disease (COPD; EPOC in Spanish) and obstructive sleep apnea (OSA; AOS in Spanish), to model those processes with automatic algorithms, and to generate new data with properties similar to the real ones.

- Development of image analysis tools in the infrared spectrum to support biomarker detection in COPD (EPOC) and OSA (AOS). Computer vision technologies are proposed for the enhancement and extraction of patterns in brown adipose tissue images. The developments will build on the major advances in this field, dominated in recent years by deep convolutional networks and, more recently, Transformers. Initially, state-of-the-art models will be adapted to the infrared domain using auxiliary data for pre-adaptation, followed by specific fine-tuning on adipose tissue images based on the expert knowledge of the consortium.

HumanCAIC: Enhanced Behavioral Biometrics for Human-Centric AI in Context (2022-2025)

Title: Enhanced Behavioral Biometrics for Human-Centric AI in Context (HumanCAIC)

Type: Spanish National R&D Program

Code: TED2021-131787B-I00

Funding: ca. 314 Keuros

Participants: Univ. Autonoma de Madrid and Univ. Politecnica de Madrid

Period: December 2022 – November 2024

Principal investigator(s): Julian Fierrez and Aythami Morales

Objectives:

- New Human-Centric AI developments based on improved biometrics and behavior understanding. HumanCAIC will advance the state of the art in automatic behavior understanding by: i) exploiting the latest advances in multimodal fusion of heterogeneous sources of information; ii) incorporating human feedback and human intervention to guide the learning process of automatic decision-making algorithms; and iii) adding context features to improve the domain adaptation of general models to specific applications.

- Developments on privacy-preserving, transparent, and discrimination-aware machine learning technologies. HumanCAIC proposes novel machine learning strategies designed to incorporate Human-Centric requirements as learning objectives of the training processes of behavioral models.

- Development of novel trustworthy Human-AI interfaces designed to reduce the gap between the social sciences and AI developments.

- We will evaluate the developments of HumanCAIC in two case studies focused on: i) digital education; and ii) digital health.

BBforTAI: Biometrics and Behavior for Unbiased and Trustworthy AI with Applications (2022-2024)

Title: Biometrics and Behavior for Unbiased and Trustworthy AI with Applications

Type: Spanish National R&D Program

Code: PID2021-127641OB-I00

Funding: ca. 273 Keuros

Participants: Univ. Autonoma de Madrid and Univ. Politecnica de Madrid

Period: January 2022 – December 2024

Principal investigator(s): Julian Fierrez and Aythami Morales

Objectives:

- The development of new methods for measuring and combating biases in multimodal AI frameworks. In this sense, the aim is to extend existing bias analysis and explainability studies in AI, as well as to develop bias prevention methods that are general enough to be applied to different data-driven learning architectures regardless of the nature of the data.

- Develop new methods for improving the trust in multimodal AI systems, by integrating the developments in objective 1 with recent advances in secure and privacy-preserving AI.

- Develop core technologies for incorporating the main advances in the previous points into the value chain of practical application areas of social importance. In this regard, we propose four case studies based on some pillars of the welfare society: (i) e-learning platforms; (ii) multimodal biometrics for e-health; (iii) equal opportunities in the access to the labour market; and (iv) media and content analytics.

- Technical cooperation with ELSA and social/human experts to generate new knowledge on the behavior of people in the contexts portrayed in BBforTAI. The project aims to contribute to legal developments, standards, and best practices for the use of AI systems, as well as to analyze human behavior patterns in the four case studies listed in the previous point.

Selected Publications:

- I. Serna, A. Morales, J. Fierrez and N. Obradovich, “Sensitive Loss: Improving Accuracy and Fairness of Face Representations with Discrimination-Aware Deep Learning”, Artificial Intelligence, April 2022. [PDF] [DOI] [Dataset] [Tech]

- R. Daza, D. DeAlcala, A. Morales, R. Tolosana, R. Cobos and J. Fierrez, “ALEBk: Feasibility Study of Attention Level Estimation via Blink Detection applied to e-Learning”, in AAAI Workshop on Artificial Intelligence for Education (AI4EDU), Vancouver, Canada, February 2022. [PDF] [DOI] [Dataset]

- I. Serna, D. DeAlcala, A. Morales, J. Fierrez and J. Ortega-Garcia, “IFBiD: Inference-Free Bias Detection”, in AAAI Workshop on Artificial Intelligence Safety (SafeAI), CEUR, vol. 3087, Vancouver, Canada, February 2022. [PDF] [DOI] [Dataset] [Code]

TRESPASS: Training in Secure and Privacy-preserving Biometrics (2020-2024)

Title: Training in Secure and Privacy-preserving Biometrics

Type: H2020 Marie Curie Initial Training Network

Code: H2020-MSCA-ITN-2019-860813

Funding: ca. 502 Keuros

Participants: UAM, Univ. Applied Sciences H-DA (Germany), Univ. Groningen (Netherlands), IDIAP (Switzerland), Chalmers Univ. (Sweden), Katholieke Univ. Leuven (Belgium)

Period: January 2020 – December 2023

Principal investigator(s): Massimiliano Todisco (Julian Fierrez and Aythami Morales for UAM)

Objectives:

- To combat rising security challenges, the global market for biometric technologies is growing at a fast pace. It includes all processes used to recognise, authenticate and identify persons based on biological and/or behavioural characteristics.

- The EU-funded TReSPAsS-ETN project will deliver a new type of security protection (through generalised presentation attack detection (PAD) technologies) and privacy preservation (through computationally feasible encryption solutions).

- The TReSPAsS-ETN Marie Sklodowska-Curie early training network will couple specific technical and transferable skills training including entrepreneurship, innovation, creativity, management and communications with secondments to industry.

Selected Publications:

- J. Hernandez-Ortega, J. Fierrez, L. F. Gomez, A. Morales, J. L. Gonzalez-de-Suso and F. Zamora-Martinez, “FaceQvec: Vector Quality Assessment for Face Biometrics based on ISO Compliance”, in IEEE/CVF Winter Conf. on Applications of Computer Vision Workshops (WACVw), Waikoloa, HI, USA, January 2022. [PDF] [DOI] [Code] [Tech]

- L. F. Gomez, A. Morales, J. R. Orozco-Arroyave, R. Daza and J. Fierrez, “Improving Parkinson Detection using Dynamic Features from Evoked Expressions in Video”, in IEEE/CVF Conf. on Computer Vision and Pattern Recognition Workshops (CVPRw), June 2021, pp. 1562-1570. [PDF] [DOI]

- O. Delgado-Mohatar, R. Tolosana, J. Fierrez and A. Morales, “Blockchain in the Internet of Things: Architectures and Implementation”, in IEEE Conf. on Computers, Software, and Applications (COMPSAC), Madrid, Spain, July 2020. [PDF] [DOI]

Web: https://www.trespass-etn.eu/

AI4Food: Inteligencia Artificial para la Prevención de Enfermedades Crónicas a través de una Nutrición Personalizada (2021-2024)

Title: Inteligencia Artificial para la Prevención de Enfermedades Crónicas a través de una Nutrición Personalizada

Type: CAM Synergy Program

Code: Y2020/TCS6654

Funding: ca. 310 Keuros UAM (620 Keuros in total)

Participants: Univ. Autonoma de Madrid and IMDEA-Food Institute

Period: July 2021 – June 2024

Principal investigator(s): Javier Ortega-García (UAM technical lead: Aythami Morales)

Objectives:

- AI4Food will develop a series of enabling technologies to process, analyze and exploit a large number of biometric signals indicative of individual habits, phenotypic and molecular data.

- AI4Food will integrate all this information and develop new machine learning algorithms to generate a paradigm shift in the field of nutritional counselling.

- AI4Food technology will allow a more objective and effective assessment of the individual nutritional status, helping experts to propose changes towards healthier eating habits from general solutions to personalized solutions that are more effective and sustained over time for the prevention of chronic diseases.

- AI4Food will also advance knowledge on three questions using these new technologies: 1) WHICH are the sensor-dependent and sensor-independent biomarkers that work best for nutritional modelling of human behavior and habits? 2) WHEN, that is, under what circumstances (e.g., user habits, signal quality, context, phenotypic and molecular data), and 3) HOW can we best leverage those signals and context information to improve nutritional recommendations?

BiBECA: Biometrics and Behavior for Context-Aware and Secure Human-Computer Interaction (2019-2021)

Title: Biometrics and Behavior for Context-Aware and Secure Human-Computer Interaction

Type: Spanish National R&D Program

Code: RTI2018-101248-B-I00

Funding: ca. 243 Keuros

Participants: Univ. Autonoma de Madrid

Period: January 2019 – December 2021

Principal investigator(s): Julian Fierrez and Aythami Morales

Objectives:

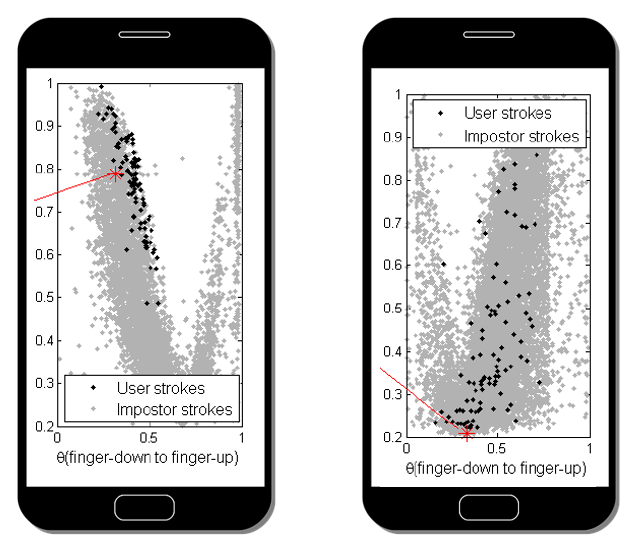

- Generating a better understanding of the nature of biometrics in terms of distinctiveness, permanence, and relation to stress levels and emotions. New methods for analyzing and modelling complex yet structured relations in heterogeneous data will be developed in BIBECA.

- Design of robust algorithms for biometric representation and matching with uncooperative users in unconstrained and varying scenarios. On this topic, BIBECA will contribute innovative adaptive fusion schemes and public databases with heterogeneous continuous biometrics, captured in realistic mobile and desktop setups.

- Experimental exploration and technology development towards new biometrics applications: mobile authentication and ubiquitous biometrics.

- Understand and improve the usability of biometric systems.

- Understand and improve the security in biometric systems. BIBECA will track the advances in security against attacks and privacy preservation in biometric systems, and will adapt and investigate those advances for the kind of human interaction signals to be explored during the project implementation.

Selected Publications:

A. Morales, J. Fierrez, R. Vera-Rodriguez, R. Tolosana, “SensitiveNets: Learning Agnostic Representations with Application to Face Images,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43 (6), pp. 2158-2164, 2021.

A. Morales, J. Fierrez, A. Acien, R. Tolosana, I. Serna, “SetMargin Loss applied to Deep Keystroke Biometrics with Circle Packing Interpretation,” Pattern Recognition, vol. 122 (108283), 2022.

A. Ortega, J. Fierrez, A. Morales, Z. Wang, M. Cruz, C. Alonso, T. Ribeiro, “Symbolic AI for XAI: Evaluating LFIT Inductive Programming for Explaining Biases in Machine Learning,” Computers, 10 (11), pp. 154, 2021.

A. Acien, A. Morales, J.V. Monaco, R. Vera-Rodriguez, J. Fierrez, “TypeNet: Deep Learning Keystroke Biometrics,” IEEE Transactions on Biometrics, Behavior, and Identity Science, vol. 4 (1), pp. 57-70, 2022.

NEUROMETRICS: New Biomarkers to Characterize Neurodegenerative Diseases (2017-2018)

Funded by: PROYECTOS DE COOPERACIÓN INTERUNIVERSITARIA UAM-BANCO SANTANDER con América Latina

The human body is constantly communicating information about our health. This information can be captured and processed to model the cognitive and neuromotor health of users. Traditionally, such measurements are taken manually by physicians during isolated visits. Biometric signal processing involves the analysis of these measurements to provide useful information upon which clinicians can base their decisions. This project has explored new ways to process these signals using a variety of sensors and new artificial intelligence algorithms. It is time to redefine some of the traditional biomarkers to exploit these new technological capabilities.

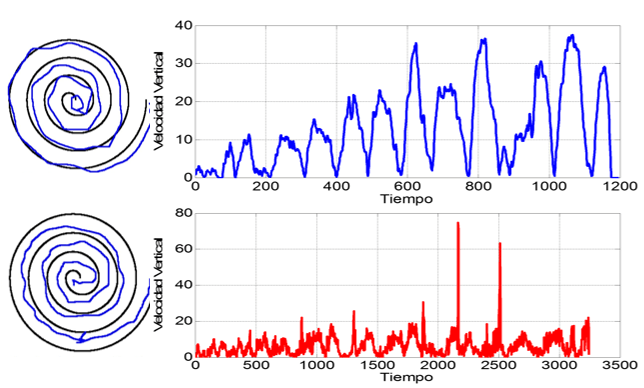

Handwriting dynamics: Handwriting is a behavioural biometric trait that reflects neuromotor characteristics of the user (e.g. our brain and muscles, among other factors, define the way we write) as well as socio-cultural influences (e.g. Western and Asian styles). The dynamics of handwriting include rich patterns related to velocity, acceleration, and angular information, among others.
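As an illustration of the velocity, acceleration, and angular patterns mentioned above, the following is a minimal sketch of how such features could be derived from a sampled pen trajectory. It is not the project's actual feature extractor: the function name `handwriting_dynamics`, the input layout (coordinate lists plus timestamps in seconds), and the finite-difference scheme are assumptions made for this example.

```python
import math


def handwriting_dynamics(xs, ys, ts):
    """Hypothetical helper: basic dynamic features from a pen trajectory.

    xs, ys: pen coordinates; ts: strictly increasing timestamps in seconds.
    Returns (speeds, angles, accels):
      speeds - speed on each segment between consecutive samples
      angles - direction of each segment in radians (atan2 convention)
      accels - change in speed between consecutive segments per unit time
    """
    if not (len(xs) == len(ys) == len(ts)) or len(ts) < 3:
        raise ValueError("need at least three samples of equal length")

    speeds, angles = [], []
    for i in range(1, len(ts)):
        dx = xs[i] - xs[i - 1]
        dy = ys[i] - ys[i - 1]
        dt = ts[i] - ts[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        speeds.append(math.hypot(dx, dy) / dt)  # segment speed
        angles.append(math.atan2(dy, dx))       # segment direction

    accels = []
    for i in range(1, len(speeds)):
        # Time elapsed between the midpoints of segment i-1 and segment i.
        dt_mid = (ts[i + 1] - ts[i - 1]) / 2
        accels.append((speeds[i] - speeds[i - 1]) / dt_mid)

    return speeds, angles, accels
```

For example, `handwriting_dynamics([0, 3, 3], [0, 4, 10], [0.0, 1.0, 2.0])` describes a stroke that first moves diagonally and then straight up, so the second direction angle is a right angle and the speed increases between the two segments.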

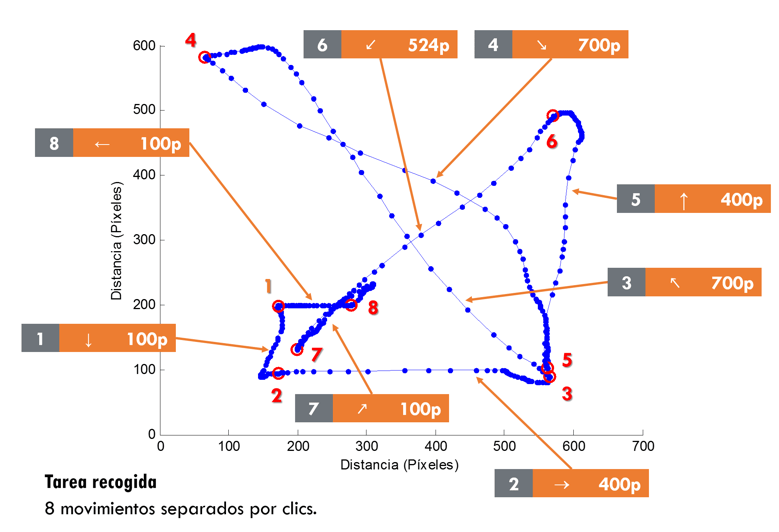

Mouse dynamics: Mouse dynamics are derived from the user-mouse interaction. Mouse trajectories carry information about the neuromotor capabilities of the user, which can be derived from velocity profiles and precision. There is ample room for research focused on developing specific tasks that reveal the user's state.

Touch dynamics: The great popularity of smartphones and tablets and their growing use in everyday tasks have led to the development of new applications based on touch interactions with the screen. Keystrokes, handwriting, and mouse input have been replaced by simple touch actions when we interact with our devices.

Selected Publications:

L. F. Gomez, A. Morales, J. R. Orozco-Arroyave, R. Daza, J. Fierrez, “Improving Parkinson Detection Using Dynamic Features From Evoked Expressions in Video,” IEEE/CVF Conference on Computer Vision and Pattern Recognition – First International Workshop on Affective Understanding in Video (CVPR-AUVi), pp. 1562-1570, 2021. [pdf]

Luis F. Gomez-Gomez, A. Morales, J. Fierrez, J. R. Orozco-Arroyave, “Exploring Facial Expressions and Affective Domains for Parkinson Detection,” arXiv:2012.06563, 2020. [pdf]

R. Castrillon, A. Acien, J. Orozco-Arroyave, A. Morales, J. Vargas, R. Vera-Rodriguez, J. Fierrez, J. Ortega-Garcia and A. Villegas, “Characterization of the Handwriting Skills as a Biomarker for Pa