Discrimination-aware Learning

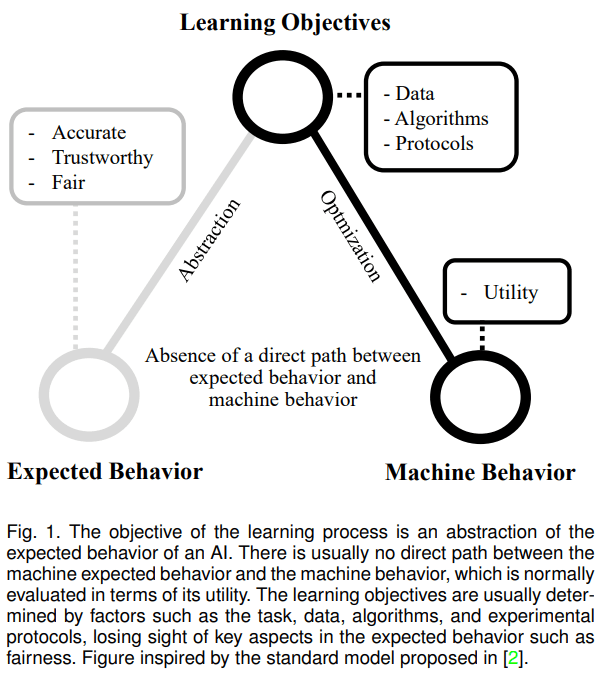

Artificial Intelligence (AI) is developed to meet human needs that can be represented in the form of objectives. To this end, the most popular machine learning algorithms are designed to minimize a loss function that defines the cost of wrong solutions over a pool of samples. This simple scheme has been very successful and has improved the performance of AI in many fields, such as Computer Vision, Speech Technologies, and Natural Language Processing. However, optimizing specific computable objectives may not lead to the behavior one expects or desires from AI. International agencies, academia, and industry are alerting policymakers and the public to unanticipated effects and behaviors of AI agents that were not considered during the design phase. In this context, aspects such as trustworthiness and fairness should be included as learning objectives rather than taken for granted.

SensitiveNets: Learning Agnostic Representations

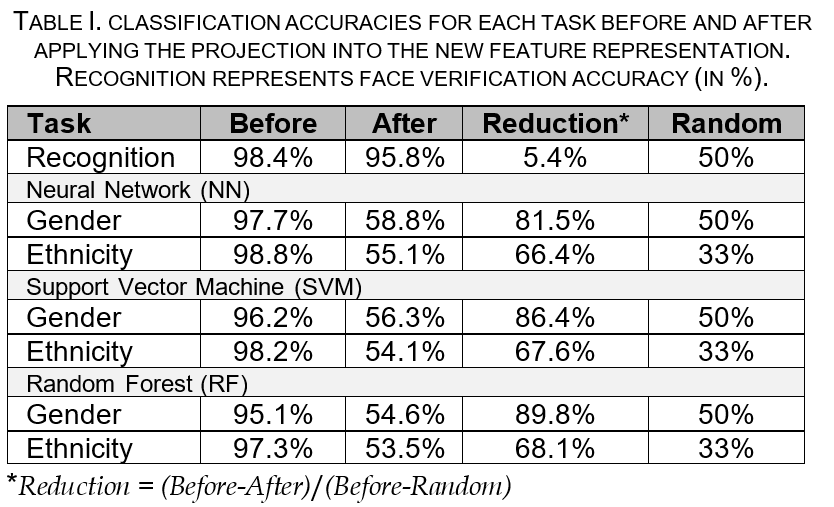

The aim of this method is to develop a new agnostic representation capable of removing certain sensitive information while maintaining the utility of the data. The proposed method, called SensitiveNets, can be trained for specific tasks (e.g., image classification) while minimizing the presence of selected covariates, both in the task at hand and in the information embedded in the trained network. These agnostic representations are expected to: i) improve the privacy of the data and of the automatic process itself; and ii) eliminate the source of discrimination that we want to prevent. Our experiments demonstrate that, after privacy is incorporated into the learned space with SensitiveNets, sensitive attributes cannot be exploited in subsequent processes. SensitiveNets ensures both privacy-preserving embeddings and equality of opportunity in decision-making algorithms based on such embeddings.
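
One way to picture training for a task while suppressing a selected covariate is a loss with two terms. The following is a minimal sketch under stated assumptions, not the SensitiveNets formulation itself: the function names, the distance-from-chance penalty, and the `weight` parameter are all hypothetical and chosen only for illustration.

```python
import math


def task_loss(p_true_class):
    """Cross-entropy for the probability assigned to the correct task label."""
    return -math.log(p_true_class)


def sensitive_penalty(p_sensitive, chance=0.5):
    """Hypothetical penalty: distance of a sensitive-attribute detector's
    output from chance level. It is zero when the detector can only guess."""
    return abs(p_sensitive - chance)


def combined_loss(p_true_class, p_sensitive, weight=1.0):
    """Task loss plus a weighted penalty on recoverable sensitive information."""
    return task_loss(p_true_class) + weight * sensitive_penalty(p_sensitive)


# Two toy embeddings with the same task confidence (0.9). A binary detector
# recovers the sensitive attribute from the first one with confidence 0.95,
# but is at chance (0.5) on the second one.
leaky = combined_loss(p_true_class=0.9, p_sensitive=0.95)
agnostic = combined_loss(p_true_class=0.9, p_sensitive=0.5)
print(f"leaky embedding loss:    {leaky:.3f}")
print(f"agnostic embedding loss: {agnostic:.3f}")
```

In this toy setting the two embeddings are equally useful for the task, but the one that leaks the sensitive attribute receives the higher loss, so an optimizer would be pushed toward the agnostic one.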

InsideBias: Measuring Bias in Deep Networks

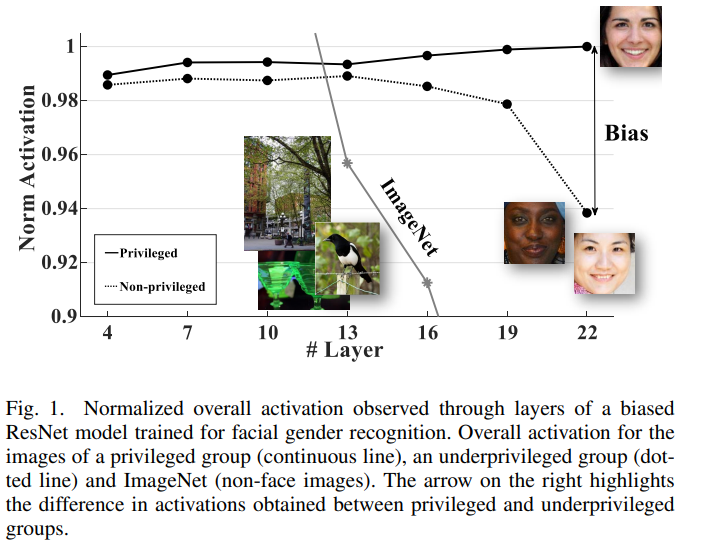

InsideBias is a novel bias detection method based on the analysis of filter activations in deep networks. InsideBias makes it possible to detect biased models with very few samples (only 15 in our experiments), which makes it very useful for algorithm auditing and discrimination-aware machine learning. We present a comprehensive analysis of how an unbalanced training dataset biases the features learned by the models. We show how ethnic attributes affect the activations of gender detection models based on face images.
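
A comparison of activations across demographic groups can be sketched as follows. This is an illustrative sketch only, assuming the activations of one layer have already been extracted as plain lists of non-negative floats. The per-sample peak statistic, the ratio score, and all function names are assumptions made for illustration and may differ from the statistic used in InsideBias.

```python
from statistics import mean


def peak_activation(sample_activations):
    """Largest activation value in one sample's layer output."""
    return max(sample_activations)


def group_activation(samples):
    """Mean of the per-sample peak activations over a group of samples."""
    return mean(peak_activation(s) for s in samples)


def activation_ratio(group_a, group_b):
    """Ratio of the weaker group's mean peak activation to the stronger one's.

    Values near 1.0 suggest the layer responds similarly to both groups;
    lower values suggest it responds more weakly to one of them.
    """
    a = group_activation(group_a)
    b = group_activation(group_b)
    strongest = max(a, b)
    if strongest == 0:
        return 1.0  # no activation for either group, so nothing to compare
    return min(a, b) / strongest


# Toy activations (hypothetical values): two samples per group, three filters.
group_a = [[0.2, 0.9, 0.4], [0.1, 0.8, 0.3]]
group_b = [[0.1, 0.4, 0.2], [0.3, 0.45, 0.1]]
ratio = activation_ratio(group_a, group_b)
print(f"activation ratio: {ratio:.2f}")
```

Because the score only needs a handful of forward passes per group, a check of this kind fits the small-sample auditing setting described above.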

Selected publications on trustworthiness and fairness topics:

A. Morales, J. Fierrez, R. Vera-Rodriguez, “SensitiveNets: Learning agnostic representations with application to face recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021. [pdf][GitHub][media]

A. Peña, J. Fierrez, A. Lapedriza, A. Morales, “Learning Emotional Blinded Face Representations,” IAPR Intl. Conf. on Pattern Recognition (ICPR), 2021. [pdf]

I. Serna, A. Peña, A. Morales, J. Fierrez, “InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics,” IAPR Intl. Conf. on Pattern Recognition (ICPR), 2021. [pdf]

A. Ortega, J. Fierrez, T. Ribeiro, A. Morales, Z. Wang, “Symbolic AI for XAI: Evaluating LFIT Inductive Programming for Fair and Explainable Automatic Recruitment,” IEEE/CVF WACV21 Workshop on Explainable & Interpretable Artificial Intelligence for Biometrics (xAI4Biom), 2021. [pdf]

A. Peña, I. Serna, A. Morales, J. Fierrez, “Bias in Multimodal AI: Testbed for Fair Automatic Recruitment,” Proc. of IEEE CVPR Workshop on Fair, Data Efficient and Trusted Computer Vision, Seattle, Washington, USA, 2020. [pdf][GitHub]

I. Serna, A. Morales, J. Fierrez, M. Cebrian, N. Obradovich and I. Rahwan, “Algorithmic Discrimination: Formulation and Exploration in Deep Learning-based Face Biometrics,” Proc. of AAAI Workshop on Artificial Intelligence Safety (SafeAI), New York, NY, USA, February 2020. [pdf]

I. Serna, A. Morales, J. Fierrez, M. Cebrian, N. Obradovich, I. Rahwan, “SensitiveLoss: Improving Accuracy and Fairness of Face Representations with Discrimination-Aware Deep Learning,” arXiv:2004.11246, 2020. [pdf]

O. Delgado-Mohatar, R. Tolosana, J. Fierrez, A. Morales, “Blockchain in the Internet of Things: Architectures and Implementation,” Proc. of IEEE Conf. on Computers, Software, and Applications (COMPSAC), Madrid, Spain, 2020. [pdf]

I. Serna, A. Peña, A. Morales, J. Fierrez, “InsideBias: Measuring Bias in Deep Networks and Application to Face Gender Biometrics,” arXiv:2004.06592, 2020. [pdf]

A. Morales, J. Fierrez, R. Vera-Rodriguez, “SensitiveNets: Unlearning Undesired Information for Generating Agnostic Representations with Application to Face Recognition,” in Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition, Workshop on Fairness Accountability Transparency and Ethics in Computer Vision (CVPR FATE/CV), Long Beach, USA, 2019. [pdf]

R. Vera-Rodriguez, M. Blazquez, A. Morales, E. Gonzalez-Sosa, J. Neves and H. Proenca, “FaceGenderID: Exploiting Gender Information in DCNNs Face Recognition Systems,” Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition, Workshop on Bias Estimation in Face Analytics (CVPR BEFA), June 2019 (Best Paper Runner Up Award). [pdf]

A. Acien, A. Morales, R. Vera-Rodriguez, I. Bartolome and J. Fierrez, “Measuring the Gender and Ethnicity Bias in Deep Models for Face Recognition,” in Proc. of IAPR Iberoamerican Congress on Pattern Recognition (CIARP), Springer, pp. 584-593, Madrid, Spain, November 2018. [pdf]

M. Gomez-Barrero, J. Galbally, A. Morales and J. Fierrez, “Privacy-Preserving Comparison of Variable-Length Data with Application to Biometric Template Protection,” IEEE Access, vol. 5, pp. 8606-8619, June 2017.